The full article follows. It appeared in issue 1/2024 of ETNdigi magazine.

BOARD FARM BRINGS MACHINE LEARNING TO THE EDGE

STMicroelectronics and Amazon Web Services have joined forces to create a machine-learning application for audio event detection that LACROIX, a member of the ST Partner Program, is looking to use in smart cities. The combination of ST and AWS technologies opens new possibilities for creating machine-learning applications at the edge.

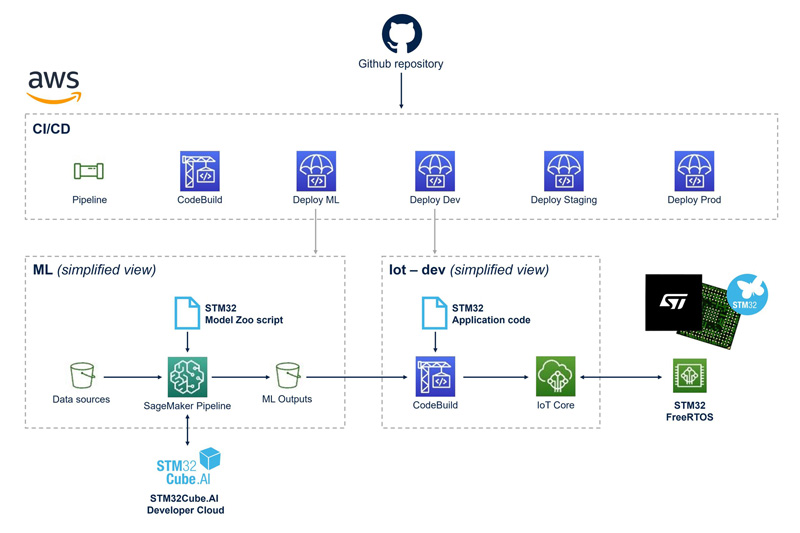

The solution uses an Audio Event Detection model from the ST Model Zoo, deployed on a Discovery kit for IoT node based on the STM32U5 microcontroller series. To ensure seamless cloud connectivity, it uses an extension pack that integrates FreeRTOS with AWS IoT Core, and the architecture supports the entire MLOps process. The machine-learning stack is responsible for data processing, model training, and evaluation. The IoT stack handles automatic device flashing through over-the-air (OTA) updates, ensuring that all devices run the latest firmware security patches.

The pipeline stack orchestrates the CI/CD (Continuous Integration / Continuous Delivery) workflow. CI/CD is a critical concept in development and operations (DevOps) because it lets developers continuously integrate their changes, optimize their code, and improve their applications. When working with neural networks, the ability to tweak a system to improve its accuracy is paramount. Consequently, developers automate the deployment of the ML and IoT stacks as they manage the entire development lifecycle of their solution.

Finally, for device monitoring and data visualization, Amazon Managed Grafana was used to create dynamic, interactive dashboards for real-time monitoring and analysis. Since ST launched STM32Cube.AI in 2019, the industry has clearly been moving toward greater adoption of AI on microcontrollers for all sorts of applications. In this instance, developers use a version of the YAMNet audio classification model optimized for STM32 MCUs to run an audio event detection program. Once the neural network is trained, the system can distinguish a wide range of sounds, from a dog barking or someone shouting to a car crash, and many more.

What did it take to make it happen today?

X-CUBE-AWS

Even before delving into the machine-learning aspect of the solution, ST's release of the X-CUBE-AWS extension pack was one of the reasons AWS chose to work with the STM32U5 IoT Discovery Kit. The software package contains a reference integration of FreeRTOS™ with AWS IoT that connects easily and securely to the AWS cloud. Developers can therefore spend their time on the AI itself rather than figuring out how to manage secure connectivity to a remote server. ST also provides a reference implementation based on STM32Cube tools and software, which further simplifies IoT designs that leverage the rest of the STM32 ecosystem.

STM32 AI MODEL ZOO AND BOARD FARM

The STM32 AI Model Zoo and STM32 Board Farm are two features launched with the STM32Cube.AI Developer Cloud. By using the audio model from the ST Model Zoo, developers already benefit from an optimized algorithm. Teams working on a final product will further prune their neural network to increase performance. However, when gauging their needs, performing a feasibility study on a microcontroller, or building a proof of concept, engineers can trust that the version in the ST Model Zoo will run efficiently on STM32 devices.

The STM32 Board Farm is accessible through the STM32Cube.AI Developer Cloud online platform. It enables integrators to benchmark their neural network on a wide range of STM32 boards and immediately determine which board offers the best price-to-performance ratio. Before the Board Farm, developers had to buy boards and compile a separate application for each device, a cumbersome and time-consuming process. With the STM32Cube.AI Developer Cloud, users upload the algorithm and run it on real hardware boards hosted in the cloud. The service shows them inference times, memory requirements, and the optimized model topology on various STM32 microcontrollers. Hence, beyond the AWS STM32 ML at the Edge Accelerator, this is a new and more straightforward way of approaching AI on MCUs.

An overview of the AWS STM32 ML at the Edge Accelerator.

AWS ECOSYSTEM

The new demo code on GitHub will resonate with many teams because it uses various Amazon technologies. For instance, thanks to Amazon SageMaker, developers can more easily retrain a neural network to take advantage of a new dataset. Similarly, the AWS Cloud Development Kit helps deploy new firmware to the existing fleet, while Grafana provides a dashboard and analytical tools for real-time monitoring. Amazon presented the AWS STM32 ML at the Edge Accelerator at its AWS re:Invent conference, showing how the ST-Amazon collaboration can help the industry create and deploy powerful edge AI systems.

FUTURE APPLICATIONS - SMART CITIES CARRY SO MUCH POTENTIAL

The AWS STM32 ML at the Edge Accelerator is a symbolic first step for ST. The company plans to release additional AI application examples that take advantage of the Amazon ecosystem and apply traditional neural networks to concrete use cases. For instance, ST developers are studying hand-posture detection using a time-of-flight sensor.

Instead of relying on image recognition, a device like ST's VL53L5 time-of-flight sensor, with its 64 distinct zones, could feed a machine-learning algorithm that detects whether a thumb is up or down. This would create a new way to interact with a computer system while lowering costs, since time-of-flight sensors are cheaper and require fewer resources than camera sensors.
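The following is a minimal sketch, not ST's method, of how 64-zone frames could feed a classifier. Each frame is read as an 8x8 grid of distances and flattened into a 64-element feature vector. That vector is then matched against the average frame of each gesture. This is a nearest-centroid classifier, swapped in here for the neural network the article mentions to keep the idea visible. The millimetre units, the `thumb_up`/`thumb_down` labels and the `synthetic_frame` helper are hypothetical. Real VL53L5 readings would come through ST's sensor driver, which this sketch does not use.

```python
from math import dist  # Euclidean distance between two points (Python 3.8+)

GRID = 8  # a 64-zone sensor read out as an 8x8 grid of distances


def flatten(frame):
    """Turn an 8x8 distance grid into a 64-element feature vector."""
    if len(frame) != GRID or any(len(row) != GRID for row in frame):
        raise ValueError("expected an 8x8 frame")
    return [float(d) for row in frame for d in row]


def train_centroids(samples):
    """Average the feature vectors of each label.

    samples: iterable of (label, frame) pairs.
    Returns a dict mapping each label to its mean 64-element vector.
    """
    sums, counts = {}, {}
    for label, frame in samples:
        vec = flatten(frame)
        acc = sums.setdefault(label, [0.0] * len(vec))
        for i, value in enumerate(vec):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}


def classify(frame, centroids):
    """Return the label whose centroid is nearest (Euclidean) to the frame."""
    vec = flatten(frame)
    return min(centroids, key=lambda label: dist(vec, centroids[label]))


def synthetic_frame(near_rows, near_mm=200.0, far_mm=1000.0):
    """Hypothetical test frame: background at far_mm and a 'thumb' at near_mm
    in columns 3-4 of the given rows. Not real VL53L5 data."""
    return [
        [near_mm if (r in near_rows and c in (3, 4)) else far_mm for c in range(GRID)]
        for r in range(GRID)
    ]


if __name__ == "__main__":
    up = synthetic_frame(range(0, 4))    # thumb occupying the upper half
    down = synthetic_frame(range(4, 8))  # thumb occupying the lower half
    centroids = train_centroids([("thumb_up", up), ("thumb_down", down)])
    probe = synthetic_frame(range(0, 3))  # a slightly shorter "up" gesture
    print(classify(probe, centroids))     # prints thumb_up
```

A trained neural network would replace `train_centroids` and `classify`. The framing step, treating the 64 zones as one small depth image, would stay the same. That low input size is why a time-of-flight approach can be lighter than a camera pipeline.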

The interest from LACROIX also shows why the AWS STM32 ML at the Edge Accelerator is breaking ground in the industry. Researchers are already exploring how microphones and machine learning can create better smart-city infrastructure. Some studies examine whether a neural network and an off-the-shelf microphone could replace a significantly more expensive phonometer (sound level meter) to track noise pollution in urban environments. Audio capture could also help track vehicle or pedestrian traffic to better monitor a city's activity.
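A microphone-based system would have to reproduce the core measurement of a sound level meter, which can be sketched in a few lines. A sound level meter reports the equivalent continuous sound level, L_eq = 10 · log10(mean(p²) / p_ref²). Here p is the sound pressure in pascals and p_ref = 20 µPa. This is a minimal sketch, not the method of any study mentioned above, and it makes two simplifying choices:

- It assumes samples can be converted to pascals with a hypothetical calibration factor `pa_per_unit`.
- It omits the frequency (A-) weighting and time weighting that a standards-compliant phonometer applies.

```python
import math

P_REF = 20e-6  # reference sound pressure for air: 20 micropascals


def leq_db(samples, pa_per_unit):
    """Equivalent continuous sound pressure level (dB SPL) of a block of samples.

    samples: raw microphone samples, e.g. normalized to [-1.0, 1.0]
    pa_per_unit: hypothetical calibration factor (pascals per sample unit),
        normally obtained by recording a reference tone of known level
    No frequency weighting (e.g. A-weighting) is applied.
    """
    if not samples:
        raise ValueError("need at least one sample")
    mean_square = sum((s * pa_per_unit) ** 2 for s in samples) / len(samples)
    if mean_square == 0.0:
        return float("-inf")  # digital silence: the level is unbounded below
    return 10.0 * math.log10(mean_square / P_REF ** 2)


if __name__ == "__main__":
    # A 1 kHz sine sampled at 16 kHz with a peak of sqrt(2) Pa has an RMS of 1 Pa.
    fs, f = 16_000, 1_000
    tone = [math.sqrt(2) * math.sin(2 * math.pi * f * n / fs) for n in range(fs)]
    print(round(leq_db(tone, pa_per_unit=1.0), 1))  # prints 94.0
```

As a sanity check, a tone with an RMS pressure of 1 Pa comes out at about 94 dB SPL, the level produced by many acoustic calibrators. Doubling the pressure adds about 6 dB.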

Flow measurement is a critical but often problematic function in many devices. Traditional mechanical sensors wear out and are blind to low flow rates. Ready-made modules based on ultrasonic technology now offer an accurate, maintenance-free and easily integrated alternative for both consumer and industrial applications.
